Introduction

When you initialize your RASA project, it provides you with out of the box suggested NLU config.

Which works perfectly fine when you are just experimenting with the framework.

But once you start looking for more advanced options to optimize your RASA model training process, it’s likely you will need to tune your config.yml file.

The config.yml file can be divided into two parts, the first one is for the NLU pipeline and the second part is to define the policies of RASA core.

In this article, we will discuss RASA NLU pipeline, its various components, their configurations and more.

NLU Pipeline

The performance of the classifier relies generally upon the NLU pipeline. In this way, you need to minister your NLU pipeline as per your training dataset. For instance, on the off chance that your dataset has less training examples, at that point, you may need to utilize pre-trained tokenizers like SpacyTokenizer, ConveRTTokenizer. The decision of tokenizer may likewise influence the kind of featurizers. The parts like RegexFeaturizer can be utilized to extricate certain regex examples and query table qualities. Also, DucklingHTTPExtractor can be utilized to extract entities other than the entities you have set apart in your dataset, it tends to be utilized for entities like dates, amounts of cash, distance, and so on.

Neither duckling nor spacy expects you to explain your dataset. Other tuning parameters like epochs, the number of transformer layers, turning on/off entity recognition, embedding dimension, weight sparsity, etc, and so forth can be arranged under the component DIETClassifier (Dual Intent and Entity Transformer).

If your assistant needs to converse only in spaCy’s supported languages, it is recommended to use the following pipeline:

language: "fr" # your two-letter language code

pipeline:

- name: SpacyNLP

- name: SpacyTokenizer

- name: SpacyFeaturizer

- name: RegexFeaturizer

- name: LexicalSyntacticFeaturizer

- name: CountVectorsFeaturizer

analyzer: "char_wb"

min_ngram: 1

max_ngram: 4

- name: DIETClassifier

epochs: 100

- name: EntitySynonymMapper

- name: ResponseSelector

epochs: 100

spaCy provides language specific pre trained word embeddings. This really helps when you have low training data. These embeddings are helpful as they already have some kind of linguistic knowledge. For example words that are similar in meaning will have embedding closer to each other. This helps the assistant to give higher accuracy with less training data.

However, if your assistant language is not supported by spaCy or the assistant is very domain specific spaCy’s embedding will not be useful.

Let’s say you are creating a financial assistant.

It will need to be trained on data with a lot of financial terms, abbreviations etc.

In this case, it is recommended to use the following pipeline:

pipeline:

- name: WhitespaceTokenizer

- name: RegexFeaturizer

- name: LexicalSyntacticFeaturizer

- name: CountVectorsFeaturizer

analyzer: "char_wb"

min_ngram: 1

max_ngram: 4

- name: DIETClassifier

epochs: 100

- name: EntitySynonymMapper

- name: ResponseSelector

epochs: 100

This pipeline will create vectors for only the words that are part of the training data. Also important thing to note, this pipeline can work for any language or domain, only condition is that the words should be separated by space. This creates a problem for languages like “Lao”, since in these languages the words are actually written in a continuous fashion and not separated by space. RASA supports custom components to handle these problems and also to support any other specific requirements that you would like to be part of your pipeline. We will discuss it in detail during the later part of this article.

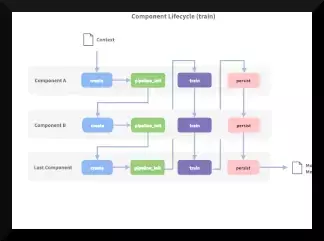

For instance, you can’t have featurizers before the tokenizers on the grounds that the yield of the first part goes about as the contribution for the accompanying component.

So, you need to plug and play with various parts and change their setup to locate the ideal pipeline for your dataset. It is likewise conceivable that with the expansion of new information throughout the timeframe, you may need to again change the NLU pipeline. You can follow the Rasa docs and Rasa gatherings for additional subtleties and examples of NLU pipelines.

Custom NLU pipeline Components

Rasa NLU permits making a custom Component to play out a particular task which NLU doesn’t presently offer. Undertakings like lemmatization, sentiment analysis, tokenization for dialects like “Lao” which we talked about before, can be done here.

Let’s add a custom component to our pipeline which does lemmatization. Now, lemmatization is where the words in the text are replaced by their base form. For example:

- “they” →” -PRON-” (pronoun)

- “are” → “be”

- “looking” →”look”

- “for” → “for”

- “new” → “new”

- “laptops” → “laptop”

Check out the below code which is based on Rasa NLU spacy_tokenizer.py where text is replaced by lemma_

import typing

from typing import Any

from rasa_nlu.components import Component

from rasa_nlu.config import RasaNLUModelConfig

from rasa_nlu.tokenizers import Token, Tokenizer

from rasa_nlu.training_data import Message, TrainingData

if typing.TYPE_CHECKING:

from spacy.tokens.doc import Doc

class Lemmatizer(Tokenizer, Component):

name = "spacy_lemma"

provides = ["tokens"]

requires = ["spacy_doc"]

def train(self,

training_data: TrainingData,

config: RasaNLUModelConfig,

**kwargs: Any)-> None:

for example in training_data.training_examples:

example.set("tokens", self.tokenize(example.get("spacy_doc")))

def process(self, message: Message, **kwargs: Any)-> None:

message.set("tokens", self.tokenize(message.get("spacy_doc")))

def tokenize(self, doc: 'Doc')-> typing.List[Token]:

return [Token(t.lemma_, t.idx) for t in doc]

For your custom component to be part of the pipeline, you should reference it inside the nlu_config.yml, which is NLU pipeline configuration file. The required format for the name of the component is “module_name.class_name”, so it’s your module name followed by a dot and then your class name. This component will be then automatically referenced by the framework.

Below is the example of the pipeline configuration file with lemmatization component:

pipeline:

- name: SpacyNLP

- name: CustomComponent.Lemmatizer

- name: SpacyTokenizer

- name: SpacyFeaturizer

- name: RegexFeaturizer

- name: LexicalSyntacticFeaturizer

- name: CountVectorsFeaturizer

analyzer: "char_wb"

min_ngram: 1

max_ngram: 4

- name: DIETClassifier

epochs: 100

- name: EntitySynonymMapper

- name: ResponseSelector

epochs: 100

You can further extend the component and even define the config for your component in the same way as for the pre-defined components, e.g.

- name: MyCustomComponent

param1: "test"

param2: "second param"

The constructor of your component should accept a dictionary as parameter:

def __init__(self, component_config: Optional[Dict[Text, Any]] = None) -> None:

if component_config is not None:

param1 = component_config.get("param1")

# ...

super().__init__(component_config)

Then you can train the Rasa NLU model with the custom component and test how it performs.

A/B testing your NLU Pipelines

To capitalize on your training data, you should train and assess your model on various pipelines and various measures of training data.

To do as such, you can provide multiple configuration files to the rasa test command:

rasa test nlu --nlu data/nlu.yml

--config config_1.yml config_2.yml

This plays out a few stages: Initially, it will create a global 80% train/20% test split from data/nlu.yml furthermore, exclude a specific level of data from the global train split. Then, train models for every configuration on the remaining data. Assess each model on the global test split.

The above cycle is rehashed with various rates of training data in step 2 to give you a thought of how every pipeline will carry on in the event that you increment the quantity of training data. Since training isn’t totally deterministic, the entire cycle is rehashed three times for every configuration indicated. A graph with the mean and standard deviations of f1-scores overall runs is plotted. You will find in the folder named nlu_comparison_results alongside all train/test sets, the trained models, f1-score diagram, classification and error reports. Investigating the f1-score diagram can assist you with comprehension on the off chance that you have enough information for your NLU model. In the event that the diagram shows that f1-score is as yet improving when the entirety of the training data is utilized, it might improve further with more data. However, on the off chance that f1-score has levelled when all data is utilized, adding more data may not help.

Also if you need to change the quantity of runs or exclusion percentages, you can:

rasa test nlu --nlu data/nlu.yml

--config config_1.yml config_2.yml

--runs 4 --percentages 0 25 50 70 90

Conclusion

This blog article talks about the RASA NLU pipelines, best practices and recommendations based on my day by day work with Rasa to totally custom-tailor the NLU pipeline to your individual prerequisites. Regardless of whether you have recently begun your contextual AI assistant development, need blasting quick training times, need to prepare word embeddings without any preparation or need to add your own components: Rasa NLU gives you the full adaptability to do as such.

In the following part, we will take it a stride further and talk about the RASA policies which will assist us with altering the RASA Core module. So, stay tuned.