Who cares about timeouts, right!? 90 percent of the time, or even more, all the servers are working, giving us APIs, web pages and all kinds of network resources.

So why should we care? Well, if you like your code working only 90% of the time, you can stop reading here - this post is not for you.

But, if you like efficiency and building stuff that works - maybe (just maybe), you should keep on reading!

I’m going to use Node.js throughout this whole thing (hope you are not disappointed, and if you are, feel free to tell me in the comments).

How to make an HTTP request?

Barebones

Here’s a basic example using the standard library:

const https = require('https');

let request = https.get('https://jsonplaceholder.typicode.com/posts/1', (response) => {

console.log(`HTTP/${response.httpVersion} ${response.statusCode} ${response.statusMessage}\n`)

console.log('Headers: ')

console.log('---------')

for (const key in response.headers) {

console.log(key, '=', response.headers[key]);

}

let data = '';

response.on('data', (chunk) => {

data += chunk;

});

response.on('end', () => {

console.log('\nBody')

console.log('----------------')

console.log(data);

});

});

request.end();

You can find many examples like that all over the internet with an easy web search.

From what I looked at, none of those examples includes the use of a timeout.

I assumed that was probably OK, since there must be a default timeout defined somewhere in that library.

It should be somewhere between 30 and 120 seconds, which is the generally popular range.

Hmmmm… I couldn’t find the default value in the documentation. I browsed here and here, but found nothing there about defaults.

Also, the only timeout I can set is a socket timeout…. Which is what exactly?

The docs say: “A number specifying the socket timeout in milliseconds. This will set the timeout before the socket is connected.”

What I understand from that sentence is that the timeout covers the duration between the opening of the socket and the actual connection of the socket.

I tried to induce it by starting my favorite networking Swiss Army knife, netcat, and then pointing my JS code at it like this:

$ nc -n -lv 127.0.0.1 8443

I waited and waited, but nothing happened :) Guess it’s not as easy as I thought.
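One possible explanation, if the experiment only passed the timeout option: according to the Node.js documentation, a request that hits its socket timeout merely emits a 'timeout' event, and the request has to be destroyed manually. Below is a minimal sketch of that pattern. The helper name getWithTimeout is hypothetical, and it uses plain http against a local listener that accepts connections and never answers, as a stand-in for the netcat server.

```javascript
const http = require('http');
const net = require('net');

// Hypothetical helper: GET a URL and reject if the socket stays idle for `ms`.
function getWithTimeout(url, ms) {
  return new Promise((resolve, reject) => {
    const request = http.get(url, { timeout: ms }, (response) => {
      let data = '';
      response.on('data', (chunk) => { data += chunk; });
      response.on('end', () => resolve(data));
    });
    // The 'timeout' event is only a notification; we have to abort ourselves.
    request.on('timeout', () => {
      request.destroy(new Error(`timeout of ${ms}ms exceeded`));
    });
    request.on('error', reject);
  });
}

// Stand-in for the netcat listener: accepts connections, never responds.
const silentServer = net.createServer((socket) => {
  socket.on('error', () => {}); // ignore resets from aborted clients
  socket.resume();              // read and discard the request, never reply
});

silentServer.listen(0, '127.0.0.1', () => {
  const { port } = silentServer.address();
  getWithTimeout(`http://127.0.0.1:${port}/`, 1000)
    .then((body) => console.log('Body:', body))
    .catch((error) => console.log('Request failed:', error.message))
    .finally(() => silentServer.close());
});
```

Without the 'timeout' handler, the request would simply keep waiting, which matches what I saw.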

Using a library

So I looked at The Top Node.js HTTP Libraries in 2020, and its first mention is axios, a promise-based HTTP client for the browser and Node.js.

This library has around 9000 (!) lines of JavaScript code, which seems like a whole lot for handling HTTP requests.

Alright, let’s see how axios handles my unresponsive netcat server.

This is my code now:

const axios = require('axios').default;

axios.get('https://127.0.0.1:8443')

.then(function (response) {

// handle success

console.log(response);

})

.catch(function (error) {

// handle error

console.log(error);

})

.then(function () {

console.log('always executed')

});

And again I run my netcat server:

$ nc -n -lv 127.0.0.1 8443

And nothing happens for quite a while. So, if this was a “real life situation”, I guess my users would just sit there and wait!

Let’s try to explicitly set a timeout:

...

axios.get('https://127.0.0.1:8443', {timeout: 1000})

...

Yay, we got an error! (quite ugly)

Error: timeout of 1000ms exceeded

at createError (/timeouts/node_modules/axios/lib/core/createError.js:16:15)

at RedirectableRequest.handleRequestTimeout (/timeouts/node_modules/axios/lib/adapters/http.js:280:16)

at RedirectableRequest.emit (events.js:315:20)

at Timeout._onTimeout (/timeouts/node_modules/follow-redirects/index.js:166:12)

at listOnTimeout (internal/timers.js:549:17)

at processTimers (internal/timers.js:492:7) {

config: {

url: 'https://127.0.0.1:8443',

method: 'get',

headers: {

Accept: 'application/json, text/plain, */*',

'User-Agent': 'axios/0.21.1'

},

transformRequest: [ [Function: transformRequest] ],

transformResponse: [ [Function: transformResponse] ],

timeout: 1000,

adapter: [Function: httpAdapter],

xsrfCookieName: 'XSRF-TOKEN',

xsrfHeaderName: 'X-XSRF-TOKEN',

maxContentLength: -1,

maxBodyLength: -1,

validateStatus: [Function: validateStatus],

data: undefined

},

code: 'ECONNABORTED',

request: Writable {

_writableState: WritableState {

objectMode: false,

highWaterMark: 16384,

finalCalled: false,

needDrain: false,

ending: false,

ended: false,

finished: false,

destroyed: false,

decodeStrings: true,

defaultEncoding: 'utf8',

length: 0,

writing: false,

corked: 0,

sync: true,

bufferProcessing: false,

onwrite: [Function: bound onwrite],

writecb: null,

writelen: 0,

afterWriteTickInfo: null,

bufferedRequest: null,

lastBufferedRequest: null,

pendingcb: 0,

prefinished: false,

errorEmitted: false,

emitClose: true,

autoDestroy: false,

bufferedRequestCount: 0,

corkedRequestsFree: [Object]

},

writable: true,

_events: [Object: null prototype] {

response: [Array],

error: [Array],

socket: [Function: destroyOnTimeout]

},

_eventsCount: 3,

_maxListeners: undefined,

_options: {

maxRedirects: 21,

maxBodyLength: 10485760,

protocol: 'https:',

path: '/',

method: 'GET',

headers: [Object],

agent: undefined,

agents: [Object],

auth: undefined,

hostname: '127.0.0.1',

port: '8443',

nativeProtocols: [Object],

pathname: '/'

},

_ended: true,

_ending: true,

_redirectCount: 0,

_redirects: [],

_requestBodyLength: 0,

_requestBodyBuffers: [],

_onNativeResponse: [Function],

_currentRequest: ClientRequest {

_events: [Object: null prototype],

_eventsCount: 2,

_maxListeners: undefined,

outputData: [],

outputSize: 0,

writable: true,

_last: true,

chunkedEncoding: false,

shouldKeepAlive: false,

useChunkedEncodingByDefault: false,

sendDate: false,

_removedConnection: false,

_removedContLen: false,

_removedTE: false,

_contentLength: 0,

_hasBody: true,

_trailer: '',

finished: true,

_headerSent: true,

socket: [TLSSocket],

connection: [TLSSocket],

_header: 'GET / HTTP/1.1\r\n' +

'Accept: application/json, text/plain, */*\r\n' +

'User-Agent: axios/0.21.1\r\n' +

'Host: 127.0.0.1:8443\r\n' +

'Connection: close\r\n' +

'\r\n',

_onPendingData: [Function: noopPendingOutput],

agent: [Agent],

socketPath: undefined,

method: 'GET',

insecureHTTPParser: undefined,

path: '/',

_ended: false,

res: null,

aborted: true,

timeoutCb: null,

upgradeOrConnect: false,

parser: [HTTPParser],

maxHeadersCount: null,

reusedSocket: false,

_redirectable: [Circular],

[Symbol(kCapture)]: false,

[Symbol(kNeedDrain)]: false,

[Symbol(corked)]: 0,

[Symbol(kOutHeaders)]: [Object: null prototype]

},

_currentUrl: 'https://127.0.0.1:8443/',

_timeout: Timeout {

_idleTimeout: 1000,

_idlePrev: null,

_idleNext: null,

_idleStart: 117,

_onTimeout: [Function],

_timerArgs: undefined,

_repeat: null,

_destroyed: true,

[Symbol(refed)]: true,

[Symbol(asyncId)]: 11,

[Symbol(triggerId)]: 6

},

[Symbol(kCapture)]: false

},

response: undefined,

isAxiosError: true,

toJSON: [Function: toJSON]

}

always executed

Cool, now we have something that we can work with.
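Since the full error dump is so noisy, here is a rough sketch of how it could be boiled down to one line. The helper name summarizeRequestError is made up, and it relies only on fields visible in the output above (code, message and config.url). The ECONNABORTED code is what axios 0.21.1 produced in that dump; other versions or settings may report timeouts differently.

```javascript
// Hypothetical helper: reduce an axios-style error to one readable line.
// Uses only fields visible in the dump above: code, message, config.url.
function summarizeRequestError(error) {
  const url = error.config ? error.config.url : '(unknown url)';
  if (error.code === 'ECONNABORTED') {
    return `Request to ${url} timed out: ${error.message}`;
  }
  return `Request to ${url} failed: ${error.message}`;
}

// Stand-in for the error object printed above (not a real axios error).
const sampleError = {
  message: 'timeout of 1000ms exceeded',
  code: 'ECONNABORTED',
  config: { url: 'https://127.0.0.1:8443', timeout: 1000 },
};

console.log(summarizeRequestError(sampleError));
// Request to https://127.0.0.1:8443 timed out: timeout of 1000ms exceeded
```

In the axios code above, this would be called from the catch handler instead of logging the whole error object.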

For future generations:

Does the axios library have a default request timeout?

No. By default the timeout is 0, which means axios never gives up on its own.

How do you set an HTTP timeout using axios?

Pass a config object with the key timeout (the value is in milliseconds).

axios.get('https://127.0.0.1:8443', {timeout: 1000})

Choose your timeout

So, how should we choose the value for the timeout?

A lot of people just use some number between 30 and 90 seconds, which is pretty much reasonable.

But what is the logic behind it?

First and foremost, we don’t want to wait forever (that’s pretty basic).

Other than that, at some point when a server doesn’t answer we want to “give up” and either retry or throw some error message somewhere.

The timeout should be longer than the maximum duration that the server usually takes to answer, plus some buffer.

So, if a server usually takes a maximum of 5 seconds to answer, we can assume that something went wrong if it doesn’t answer within 15 seconds.
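To make the arithmetic concrete, here is a tiny sketch of that rule. The helper name timeoutFromMax and the default buffer factor of 3 are my assumptions; the factor is simply what turns the 5 seconds in the example into 15.

```javascript
// Hypothetical helper: timeout = slowest usual response time * buffer factor.
// The default factor of 3 is an assumption taken from the 5 s -> 15 s example.
function timeoutFromMax(maxUsualMs, bufferFactor = 3) {
  if (!(maxUsualMs > 0) || !(bufferFactor >= 1)) {
    throw new RangeError('maxUsualMs must be > 0 and bufferFactor >= 1');
  }
  return Math.ceil(maxUsualMs * bufferFactor);
}

console.log(timeoutFromMax(5000)); // 15000
```

The returned value is in milliseconds, so it can be passed directly as the axios timeout.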

This is really boring in a world where your client communicates with only one server. In that case it doesn’t really matter when you time out. You are probably better off setting your timeout to 60 seconds, and if the server is busy, your client will “wait in line”. If there’s something wrong with your server, there’s nothing you can do about it anyway.

The more interesting scenario is when there are multiple servers sitting behind a load balancer. Take this diagram as an example:

In case your client is routed to server B, but there is something wrong with it (it doesn’t answer or takes a long time), you are better off giving up and retrying, hoping to get a different server that isn’t stuck.

Dynamic timeout

So after understanding the logic behind timeouts, we can check out a new idea. Dynamic timeouts!

NOTE I have never used this technique in production. Use at your own risk!

So first of all we need to add retries to our client code.

async function doRequest(numRetries = 10) {

for (let i = 0; i < numRetries; i++) {

let hadErr = false;

await axios.get('http://127.0.0.1:8888', { timeout: 5000 })

.then(function (response) {

// handle success

console.log(response.data);

})

.catch(function (error) {

// handle error

hadErr = true;

console.log(error);

});

if (!hadErr) {

return; // no errors - no need to retry

}

}

}

I put the request in an async function so that we can await it and rely on hadErr being set by the time axios’s promise settles.
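The same retry idea can also be written with try/catch instead of a flag. This is only a sketch: withRetries is a hypothetical helper, and the request is passed in as a function so the example runs without a server. With axios, that function could be something like () => axios.get(url, { timeout: 5000 }).

```javascript
// Hypothetical helper: call `requestFn` until it succeeds or retries run out.
// `requestFn` is any function that returns a promise.
async function withRetries(requestFn, numRetries = 10) {
  let lastError;
  for (let attempt = 1; attempt <= numRetries; attempt++) {
    try {
      return await requestFn(attempt); // success: stop retrying
    } catch (error) {
      lastError = error; // remember the failure and try again
    }
  }
  throw lastError; // every attempt failed
}

// Stand-in request that fails twice, then succeeds (no network involved).
let calls = 0;
const flakyRequest = async () => {
  calls += 1;
  if (calls < 3) throw new Error('timeout of 5000ms exceeded');
  return { data: 'ok' };
};

withRetries(flakyRequest).then((response) => {
  console.log(response.data, 'after', calls, 'attempts'); // ok after 3 attempts
});
```

Unlike the version above, this one hands the response back to the caller and rethrows the last error when all retries fail, so the caller can decide what to do.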

Next we need to implement an object to record our request times.

class requestTimeTracker {

max = 5000; // set our initial time out to 5 seconds

times = [];

numSamples = 10;

requestTime(reqTime) {

if (reqTime > this.max) {

this.max = reqTime;

}

this.times.push(reqTime);

if (this.times.length > this.numSamples) {

this.times = this.times.slice(this.times.length - this.numSamples)

}

}

average() {

if (this.times.length < this.numSamples) { // in case there is no average yet

return this.max; // return the max so we'd be safe (not 0 as timeout)

}

let times = this.times.slice(); // copy our array so there's no funny stuff

let total = 0;

for (let i = 0; i < times.length; i++) {

total += times[i];

}

return total / times.length;

}

maxRequestTime() {

return this.max;

}

}

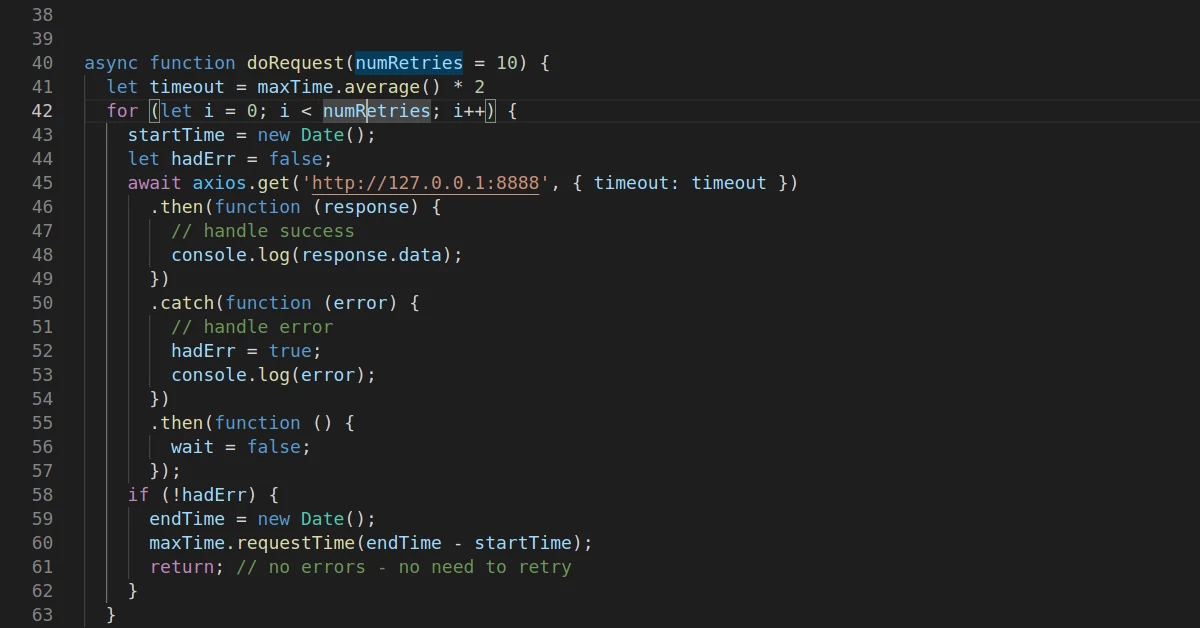

And now we can use the tracker class in our request function.

...

let maxTime = new requestTimeTracker();

async function doRequest(numRetries = 10) {

let timeout = maxTime.average() * 2

for (let i = 0; i < numRetries; i++) {

const startTime = new Date();

let hadErr = false;

await axios.get('http://127.0.0.1:8888', { timeout: timeout })

.then(function (response) {

// handle success

console.log(response.data);

})

.catch(function (error) {

// handle error

hadErr = true;

console.log(error);

});

if (!hadErr) {

const endTime = new Date();

maxTime.requestTime(endTime - startTime);

return; // no errors - no need to retry

}

}

}

...

And finally, we call our function in a loop, so it can call the server and compute the average.

...

async function main() {

for (let i = 0; i < 200; i++) {

await doRequest();

console.log('Average request time: ', maxTime.average());

}

}

main();

...

Here is my super simple server for testing.

const delay = async ms => new Promise(resolve => setTimeout(resolve, ms))

var http = require("http"),

port = process.argv[2] || 8888;

http.createServer(function(request, response) {

delay(200).then(function () {

response.writeHead(200, {"Content-Type": "application/json"});

response.write('{"userId": 1, "id": 1, "title": "optio reprehenderit"}');

response.end();

});

}).listen(parseInt(port, 10));

The requestTimeTracker records the last ten request times (it could be 200 or 50 or whatever) and lets you calculate the average request time using the average method. Until it has ten samples, average returns the maximum seen so far, which starts at 5 seconds.

I then double that average to set the timeout for the request.
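As a worked example of those numbers, here is a small standalone sketch of the same calculation. The function dynamicTimeout and the sample values are made up for illustration, and it simplifies the tracker slightly by using a fixed 5 second fallback instead of the running maximum.

```javascript
// Hypothetical helper mirroring the logic described above:
// average the last `windowSize` samples and double the result.
function dynamicTimeout(samplesMs, windowSize = 10, fallbackMs = 5000) {
  if (samplesMs.length < windowSize) {
    return fallbackMs * 2; // not enough data yet: double the initial 5 s
  }
  const recent = samplesMs.slice(-windowSize);
  const total = recent.reduce((sum, ms) => sum + ms, 0);
  return (total / recent.length) * 2;
}

// Ten made-up samples around the test server's 200 ms delay.
const samples = [205, 203, 210, 201, 204, 202, 206, 203, 207, 209];
console.log(dynamicTimeout(samples));    // 410
console.log(dynamicTimeout([205, 203])); // 10000
```

So against the test server, the timeout drops from 10 seconds to roughly 400 milliseconds once ten requests have been recorded.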

Closing words

Of course there are a lot of improvements you can make to this code, and maybe this whole thing isn’t even really necessary. But a few days ago, I opened an app on my phone (which I will not name, but ends with y.. take a wild guess) and it took forever to load. I just sat there and stared at the loading animation thinking - “Are their servers down?”, “Is there a bug in their app code?”, “Did someone forget to set the timeout?”. Well, I closed and reopened the app and it started working again. Who knows what the issue was? Maybe it was my phone.. Maybe there was no reception.. Who knows?

So, in conclusion - timeouts are important (and a bit boring). In the end, the timeout you define in your code is the time that your users are going to spend staring at the loading animation.